Note that installing PySpark with or without a specific Hadoop version in this way is experimental. The supported values are:

- 3.2: Spark pre-built for Apache Hadoop 3.2 and later (default)
- 2.7: Spark pre-built for Apache Hadoop 2.7
- without: Spark pre-built with user-provided Apache Hadoop

Try out “pyspark”, “spark-submit” or “spark-shell”. To validate that the installation worked, try running a call to spark.createDataFrame() inside “pyspark”.

That’s it. You’re all set! You’ve installed Spark and it’s ready to go.

For a Kubernetes-based setup, the prerequisites are a Kubernetes cluster (DigitalOcean, GKE, AKS, EKS) and Docker installed locally.
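The Hadoop version choice above can be sketched in shell. In the PySpark releases that accept the values 3.2, 2.7 and without, the selection is passed to pip through the PYSPARK_HADOOP_VERSION environment variable. The helper name pyspark_install_cmd is hypothetical and only for illustration; it validates the value and prints the command rather than running it, so treat it as a dry-run sketch and not a definitive installer.

```shell
#!/bin/sh
# Sketch: build the pip command for a chosen Hadoop build of PySpark.
# pyspark_install_cmd is a hypothetical helper name; it prints the command
# instead of executing it, so nothing is installed by running this block.
pyspark_install_cmd() {
    # Fall back to 3.2, the value marked "(default)" in the list above.
    hadoop_version="${1:-3.2}"
    case "$hadoop_version" in
        3.2|2.7|without)
            printf 'PYSPARK_HADOOP_VERSION=%s pip install pyspark\n' "$hadoop_version"
            ;;
        *)
            printf 'unsupported Hadoop version: %s\n' "$hadoop_version" >&2
            return 1
            ;;
    esac
}

# Print the command for the Hadoop 2.7 build.
pyspark_install_cmd 2.7
```

Copy the printed line into a terminal to perform the actual install. Newer PySpark releases may accept a different set of values, so check the installation page for the version you are using.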

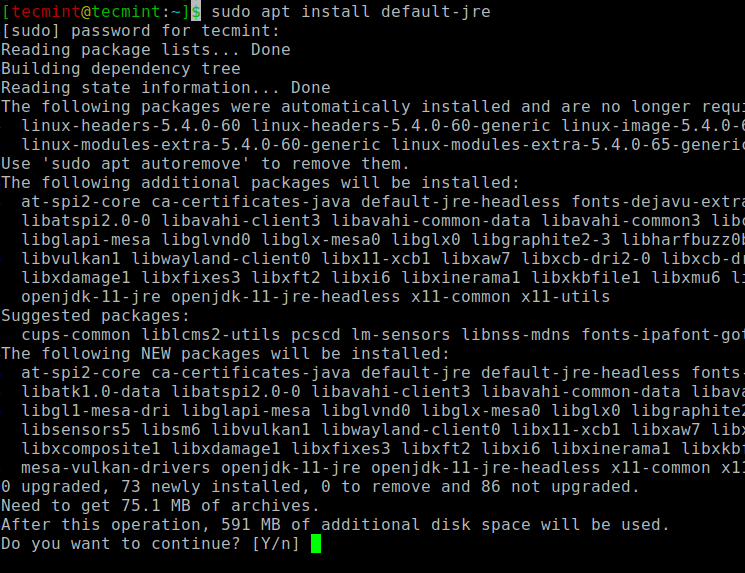

Apache Spark is a distributed, open-source, general-purpose framework for cluster computing. It is designed to offer computational speed across workloads ranging from machine learning to stream processing to complex SQL queries. It makes it easy to process large datasets and distribute the work across multiple computers.

If you need a machine to install on, check out DigitalOcean. It’s much simpler than AWS for small projects.

Spark Docker container images are available from DockerHub. These images contain non-ASF software and may be subject to different license terms.

First, install OpenJDK 8 (Java):

sudo apt update && sudo apt install -y openjdk-8-jdk-headless python

Next, download the spark-2.3.1-bin-hadoop2.7.tgz archive with wget and extract it:

tar xf spark-2.3.1-bin-hadoop2.7.tgz

Finally, set up environment variables to configure Spark. Append with >> so that the existing contents of ~/.bashrc are preserved:

echo 'export SPARK_HOME=$HOME/spark-2.3.1-bin-hadoop2.7' >> ~/.bashrc

echo 'export PATH=$PATH:$SPARK_HOME/bin' >> ~/.bashrc

echo 'export PYSPARK_PYTHON=python3' >> ~/.bashrc
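The environment-variable step above can be sketched as a small shell helper that is safe to run more than once. The function names add_line and configure_spark_env are hypothetical, chosen only for illustration. The sketch assumes the Spark archive was extracted into your home directory, as in the commands above.

```shell
#!/bin/sh
# Sketch: add the Spark variables to a shell profile without overwriting it.
# add_line and configure_spark_env are hypothetical helper names.

# Append LINE to FILE only if that exact line is not already present.
add_line() {
    file="$1"
    line="$2"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Write the three variables from the step above into the given profile file.
# Single quotes keep $HOME, $PATH and $SPARK_HOME unexpanded until the
# profile is sourced by the shell.
configure_spark_env() {
    profile="$1"
    add_line "$profile" 'export SPARK_HOME=$HOME/spark-2.3.1-bin-hadoop2.7'
    add_line "$profile" 'export PATH=$PATH:$SPARK_HOME/bin'
    add_line "$profile" 'export PYSPARK_PYTHON=python3'
}
```

To use it, call configure_spark_env ~/.bashrc and then open a new shell (or source ~/.bashrc). Because each line is only appended when missing, re-running the helper does not pile up duplicate entries, and because it appends and never truncates, the rest of the profile is left alone.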

How to install Apache Spark on DigitalOcean

One thing I hear often from people starting out with Spark is that it’s too difficult to install. Some guides are for Spark 1.x and others are for 2.x. Some guides get really detailed with Hadoop versions, JAR files, and environment variables.

So here’s yet another guide on how to install Apache Spark, condensed and simplified to get you up and running with Apache Spark 2.3.1 in 3 minutes or less. All you need is a machine (or instance, server, VPS, etc.) that you can install packages on.
